High performance computing – HPC

High performance computing (HPC) systems provide the technical infrastructure for processing particularly complex and computing-intensive tasks.

Supercomputers such as the Vienna Scientific Cluster (VSC), MUSICA (currently undergoing acceptance testing) and the EuroHPC LEONARDO are available to users at the University of Vienna for academic projects that have an extremely high demand for computing power.

Vienna Scientific Cluster (VSC)

The Vienna Scientific Cluster is a collaboration of the Vienna University of Technology (TU Wien), the University of Vienna, the University of Natural Resources and Applied Life Sciences Vienna (BOKU), the Graz University of Technology, the University of Innsbruck and the Johannes Kepler University Linz.

The current configuration level of the supercomputer is the VSC-5.

Using the VSC

Access to the Vienna Scientific Cluster is granted for projects that

- have successfully undergone a peer review procedure,

- demonstrate academic excellence, and

- require high performance computing.

If the projects have been positively reviewed by the Austrian Science Fund (FWF), the EU, etc., an additional review procedure is not necessary. The VSC form of the TU Wien is available for the submission of projects and for applications for resources.

In addition, test accounts (temporary and with limited resources) can be assigned quickly and unbureaucratically.

You can find more information about using the VSC at the VSC Wiki.

Users of the University of Vienna

- Users can log in via the access nodes vsc4.vsc.ac.at and vsc5.vsc.ac.at, which are logically part of the University of Vienna's data network.

- The connection between the University of Vienna and the TU Wien has been upgraded to 10 Gbps, so large amounts of data can be transferred smoothly between the VSC and the servers at departments of the University of Vienna.
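To get a feel for what the 10 Gbps link means in practice, the following minimal sketch estimates a lower bound on transfer time. It assumes decimal units (1 TB = 10^12 bytes), a fully available link and no protocol or storage overhead; the function name and the dataset sizes are hypothetical and chosen only for illustration. Real transfers will usually be slower.

```python
# Rough lower bound on transfer time over the 10 Gbps link described above.
LINK_GBPS = 10  # link capacity from the text, in gigabits per second


def min_transfer_seconds(size_terabytes: float, link_gbps: float = LINK_GBPS) -> float:
    """Return the theoretical minimum transfer time in seconds.

    Assumes 1 TB = 10**12 bytes, an otherwise idle link and zero overhead.
    """
    size_bits = size_terabytes * 1e12 * 8
    return size_bits / (link_gbps * 1e9)


if __name__ == "__main__":
    for size_tb in (0.1, 1, 10):  # hypothetical dataset sizes
        minutes = min_transfer_seconds(size_tb) / 60
        print(f"{size_tb} TB: at least {minutes:.1f} minutes")
```

Under these assumptions, 1 TB needs at least 800 seconds, or roughly 13 minutes.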

If you have any questions regarding the VSC, please contact service@vsc.ac.at.

VSC-5

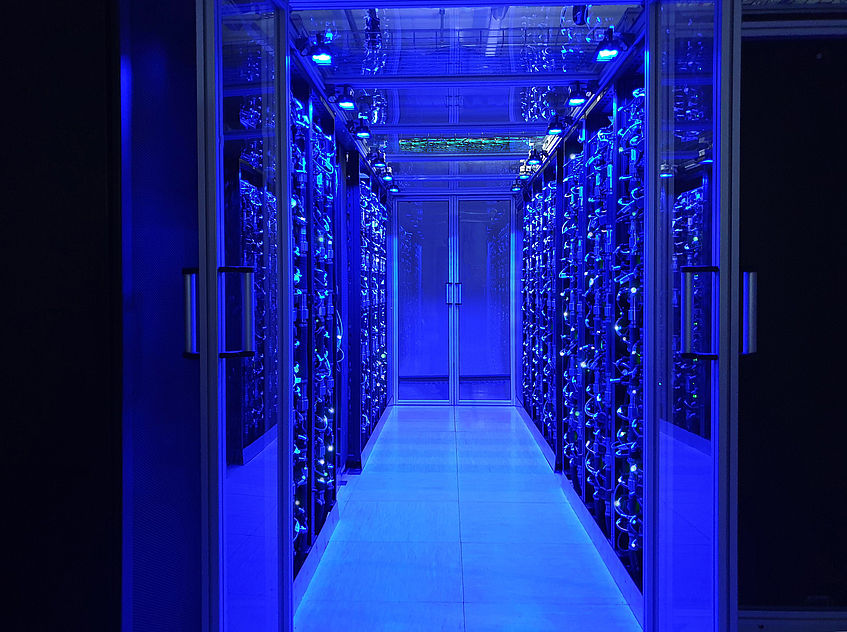

VSC-5 (Vienna Scientific Cluster) © VSC Team


The fifth and current configuration level of the Vienna Scientific Cluster, the VSC-5, is located at the Arsenal and has been in operation since 2022. The VSC-5 ranked 301st in the TOP500 list of the fastest supercomputers worldwide (as of June 2022).

Hardware and software

- The system contains a total of 98,560 cores and 120 GPUs.

- 770 computing nodes, each with 2 AMD EPYC 7713 processors with 64 cores each.

- Each node has 512 GB of main memory and a 1.92 TB SSD in the basic configuration.

- Of these nodes:

  - 6 are reserve nodes

  - 120 have extended main memory of 1 TB per node

  - 20 have extended main memory of 2 TB per node

  - 60 are GPU nodes with 2 NVIDIA A100 GPUs each

- 10 login nodes, each with 1 EPYC 7713 CPU and 128 GB of main memory.

- 3 service nodes (similarly equipped as login nodes).

- All nodes (computing, login and service nodes) each have a 1.92 TB SSD connected via PCIe 3.0 x4.

- The service nodes have 4 of these SSDs each and form a private Ceph storage.

- The internal network is 200 Gbit InfiniBand with 4:1 oversubscription.

- The login nodes each have an additional 25 Gbit Ethernet to the outside.

- The connection to the storage is via 6 × 200 Gbit Infiniband.

- The storage is shared with VSC-4, which is connected with 12 × 100 Gbit Omnipath.

- The system is largely water-cooled.
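The totals quoted at the top of this list follow directly from the per-node figures. The short sketch below reproduces them; the variable names are illustrative, and it assumes that the 60 GPU nodes are counted among the 770 computing nodes, as the list suggests.

```python
# Reproduce the VSC-5 totals from the per-node figures in the list above.
COMPUTE_NODES = 770       # computing nodes
CPUS_PER_NODE = 2         # AMD EPYC 7713 processors per node
CORES_PER_CPU = 64        # cores per EPYC 7713
GPU_NODES = 60            # nodes equipped with GPUs
GPUS_PER_GPU_NODE = 2     # NVIDIA A100 GPUs per GPU node

total_cores = COMPUTE_NODES * CPUS_PER_NODE * CORES_PER_CPU
total_gpus = GPU_NODES * GPUS_PER_GPU_NODE

print(f"Total cores: {total_cores:,}")  # 98,560
print(f"Total GPUs:  {total_gpus}")     # 120
```

Both results match the stated totals of 98,560 cores and 120 GPUs.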

VSC-4


The fourth configuration level, the VSC-4, is installed at the Arsenal. The VSC-4 ranked 82nd in the TOP500 list of the fastest supercomputers worldwide.

Hardware and software

- Based on a Lenovo DWC (direct water cooling) system

- Direct water cooling with up to 50 degrees Celsius in the supply line

- 90 % of the waste heat is removed via water cooling

- A total of 790 nodes, consisting of

  - 700 nodes, each with

    - 2 × Intel Xeon Platinum 8174 (24 cores, 3.1 GHz)

    - 96 GB main memory

    - Omnipath Fabric

    - Omnipath (100 Gbit) adapter

  - 78 fat nodes, each with

    - 2 × Intel Xeon Platinum 8174 (24 cores, 3.1 GHz)

    - 384 GB main memory

    - Omnipath Fabric

    - Omnipath (100 Gbit) adapter

  - 12 very fat nodes, each with

    - 2 × Intel Xeon Platinum 8174 (24 cores, 3.1 GHz)

    - 768 GB main memory

    - Omnipath Fabric

    - Omnipath (100 Gbit) adapter

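As a consistency check, the three node classes can be summed to confirm the stated total of 790 nodes and to derive aggregate core and memory figures. This is a minimal sketch; the aggregate core and memory numbers are derived here from the list and are not stated in the original text, and the dictionary layout is purely illustrative.

```python
# Sum the VSC-4 node classes listed above.
CPUS_PER_NODE = 2    # Intel Xeon Platinum 8174 processors per node
CORES_PER_CPU = 24   # cores per processor

# node class -> (number of nodes, main memory per node in GB)
node_classes = {
    "standard": (700, 96),
    "fat": (78, 384),
    "very fat": (12, 768),
}

total_nodes = sum(count for count, _ in node_classes.values())
total_cores = total_nodes * CPUS_PER_NODE * CORES_PER_CPU
total_memory_gb = sum(count * mem_gb for count, mem_gb in node_classes.values())

print(f"Nodes:  {total_nodes}")                # 790
print(f"Cores:  {total_cores:,}")              # 37,920
print(f"Memory: {total_memory_gb:,} GB")       # 106,368 GB
```

The node count agrees with the 790 nodes given in the list.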

Per node

- 2 × Intel Xeon 8174 24 Core 3.1 GHz CPU 240 W

- 12 × 8/32/64 GB TruDDR4 RDIMMs 2666 MHz

- 1 × Intel OPA100 HFA

- 1 × Intel 480 GB SSD S4510 SATA

- 1 × 1GbE Intel X722

Login nodes

- Lenovo ThinkSystem SR630 Server (1U)

- 10 nodes of type Lenovo SR630 1U (air-cooled)

- 2 × Intel Xeon 4108 8 Core 1.8 GHz CPU 85 W

- 12 × 16 GB TruDDR4 RDIMMs 2666 MHz

- 1 × Intel OPA100 HFA

- 2 × 10 GbE

- 1 × Intel 480 GB SSD S4510 SATA

- 2 × 550 W Platinum Hot-Swap power supply

Service nodes

- 2 nodes of type Lenovo SR630 1U (air-cooled)

- 2 × Intel Xeon 4108 8 Core 1.8 GHz CPU 85 W

- 12 × 16 GB TruDDR4 RDIMMs 2400 MHz

- 1 × Intel OPA100 HFA

- 4 × 10 GbE

- 1 × RAID 530 8i adapter

- 6 × 600 GB SAS 10k HDD

- 2 × 550 W Platinum Hot-Swap power supply

Omnipath (Infiniband) setup

- 27 Edge Intel OPA 100 Series 48 port TOR Switch dual power supply

- 16 Core Intel OPA 100 Series 48 port TOR Switch dual power supply

Ethernet setup

- 27 Edge Intel OPA 100 Series 48 port TOR Switch dual power supply

- 16 Core Intel OPA 100 Series 48 port TOR Switch dual power supply

VSC configuration levels out of operation

For information on previous VSC configuration levels, see VSC configuration levels out of operation.

MUSICA

MUSICA (Multi-Site Computer Austria) is Austria's next supercomputer with networked HPC systems at locations in Innsbruck, Linz and Vienna.

The VSC supercomputers are operated jointly by several universities, but so far at a central location and with online access for all participating institutions. The MUSICA computer hardware, on the other hand, is distributed across several locations – the resulting connection of high performance computing and cloud computing is an innovation of the MUSICA project.

MUSICA will also provide users with significantly more computing power: the fastest supercomputers in Austria to date, VSC-4 and VSC-5, have a combined performance of 5.01 petaflops (quadrillion floating-point operations per second). The new HPC cluster will provide a computing power of around 40 petaflops, making it one of the most powerful systems in the world.
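The size of this step can be expressed as a simple ratio. The sketch below uses only the two figures from the paragraph above; since the MUSICA value is given as "around 40 petaflops", the result is an approximation.

```python
# Compare the performance figures quoted above.
VSC_COMBINED_PFLOPS = 5.01  # VSC-4 and VSC-5 combined
MUSICA_PFLOPS = 40.0        # approximate figure from the text

speedup = MUSICA_PFLOPS / VSC_COMBINED_PFLOPS
print(f"MUSICA offers roughly {speedup:.1f} times the combined VSC performance")
```

In other words, MUSICA is expected to deliver about eight times the combined performance of VSC-4 and VSC-5.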

The system is currently undergoing an acceptance test.

Hardware

- The Innsbruck and Linz locations each have 48 CPU nodes and 80 GPU nodes.

- The Vienna location has 72 CPU nodes and 112 GPU nodes.

Applies to all systems (Vienna, Linz and Innsbruck):

- GPU nodes: 4 × NVIDIA H100 (94 GB) connected via NVLink, 2 × AMD EPYC 9654 (96 cores each), 768 GB memory, 4 × NDR200; maximum power consumption 4 kW

- CPU nodes: 2 × AMD EPYC 9654 (96 cores each), 768 GB memory, 1 × NDR200

- WEKA Storage Server from MEGWARE: 12 NVMe disks with 15.36 TB each, 2 × NDR400 InfiniBand high-speed network

- WEKA® Data Platform: 4 PB all-flash storage solution with up to 1800 GB/s read and 750 GB/s write performance
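The per-site node counts and per-node specifications above can be combined into system-wide totals. The following sketch is a back-of-the-envelope calculation: the totals are derived here and are not stated in the original text, the data layout is illustrative, and it assumes that "96 cores" refers to each of the two EPYC 9654 processors in a node.

```python
# Aggregate MUSICA hardware figures across the three sites.
# site -> (CPU nodes, GPU nodes), taken from the list above
sites = {
    "Innsbruck": (48, 80),
    "Linz": (48, 80),
    "Vienna": (72, 112),
}

GPUS_PER_GPU_NODE = 4    # NVIDIA H100 per GPU node
CPUS_PER_NODE = 2        # AMD EPYC 9654 per node (CPU and GPU nodes alike)
CORES_PER_CPU = 96       # assumption: 96 cores per processor
DISKS_PER_SERVER = 12    # NVMe disks per storage server
DISK_TB = 15.36          # capacity per NVMe disk in TB

total_cpu_nodes = sum(cpu for cpu, _ in sites.values())
total_gpu_nodes = sum(gpu for _, gpu in sites.values())
total_gpus = total_gpu_nodes * GPUS_PER_GPU_NODE
total_cores = (total_cpu_nodes + total_gpu_nodes) * CPUS_PER_NODE * CORES_PER_CPU
raw_tb_per_storage_server = DISKS_PER_SERVER * DISK_TB

print(f"CPU nodes: {total_cpu_nodes}, GPU nodes: {total_gpu_nodes}")
print(f"GPUs: {total_gpus}, CPU cores: {total_cores:,}")
print(f"Raw capacity per storage server: {raw_tb_per_storage_server:.2f} TB")
```

Under these assumptions the three sites together provide 168 CPU nodes, 272 GPU nodes, 1,088 GPUs and 84,480 CPU cores, and each storage server holds about 184 TB of raw NVMe capacity.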

Cooling:

- largely direct water cooling

- A high cooling water temperature (around 40 °C) makes it possible to reuse the waste heat. In Vienna, for example, it is used to heat neighbouring buildings.

LEONARDO

The EuroHPC LEONARDO is one of the world's most powerful supercomputers. The Leonardo cluster offers a computing power of almost 250 petaflops and a storage capacity of over 100 petabytes. A share of the computing resources is reserved for users from Austria, who can apply for compute time for their projects via the AURELEO calls for proposals.

Further information can be found on the LEONARDO website.

VSC Research Center

The VSC Research Center was established as part of a project in the framework of structural funds for the higher education area (Hochschulraumstrukturmittelprojekt). It offers a training programme that is targeted at using supercomputers and that supports users in optimising programs. The courses of the VSC Research Center are open to members of the partner universities and also – subject to availability – to external persons.

This project also supports doctoral candidates and postdocs in optimising and documenting highly relevant programs and making them available as open source software.

Further information about courses and the VSC Research Center