Deploying an OpenAI model in your resource

This user guide shows you how to deploy an OpenAI model of your choice in your Azure OpenAI resource.

Requirement

You have already created an Azure OpenAI resource.

Deploying a model

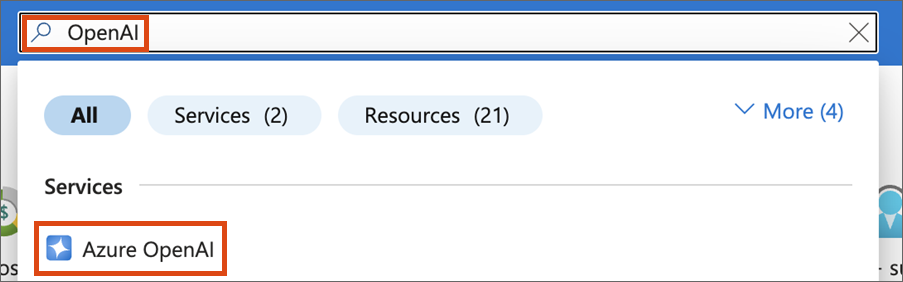

- Open the Azure portal.

- Enter the term OpenAI in the search bar at the top.

- Select the Azure OpenAI service under Services in the search results.

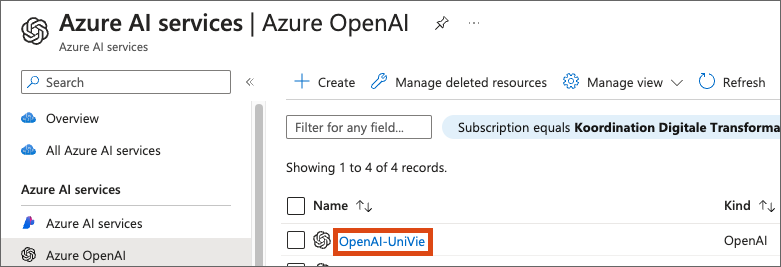

The overview that opens lists your Azure OpenAI resources. Click the name of your resource.

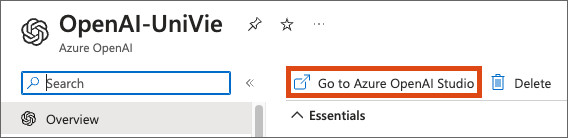

Click Go to Azure AI Foundry Portal.

You will be redirected to the Azure AI Foundry portal. You may be asked to sign in with your Azure username and password again.

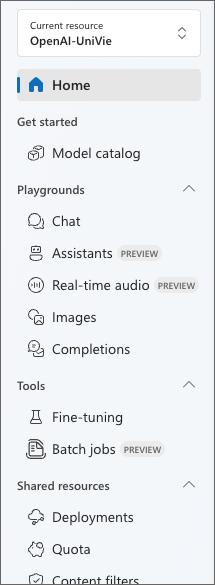

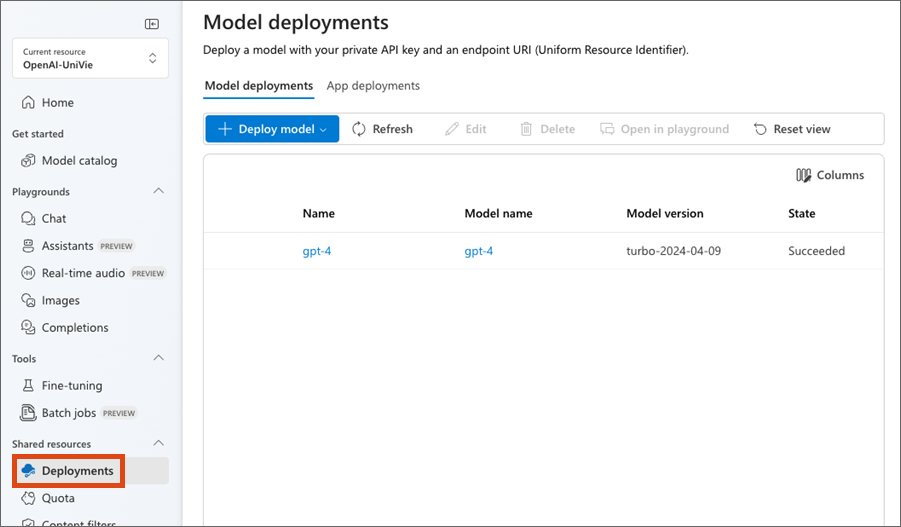

The Azure AI Foundry portal has a navigation menu on the left-hand side.

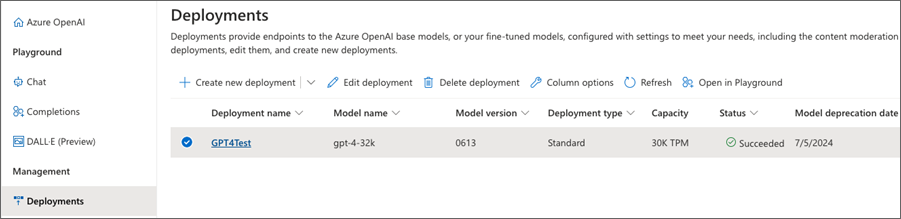

Under Deployments, you will find all models that are currently deployed in your resource.

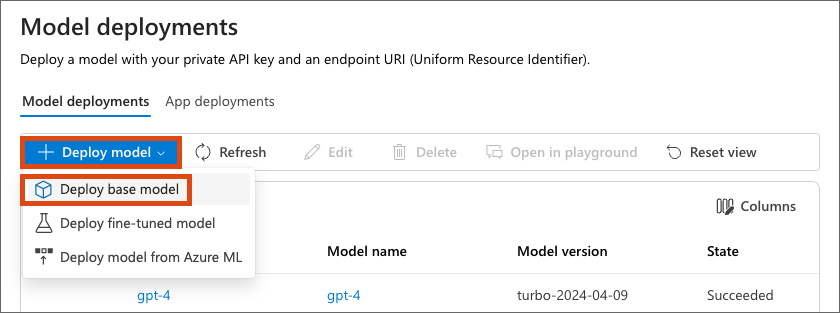

To deploy a new model, select + Deploy model and then choose Deploy base model from the drop-down menu.

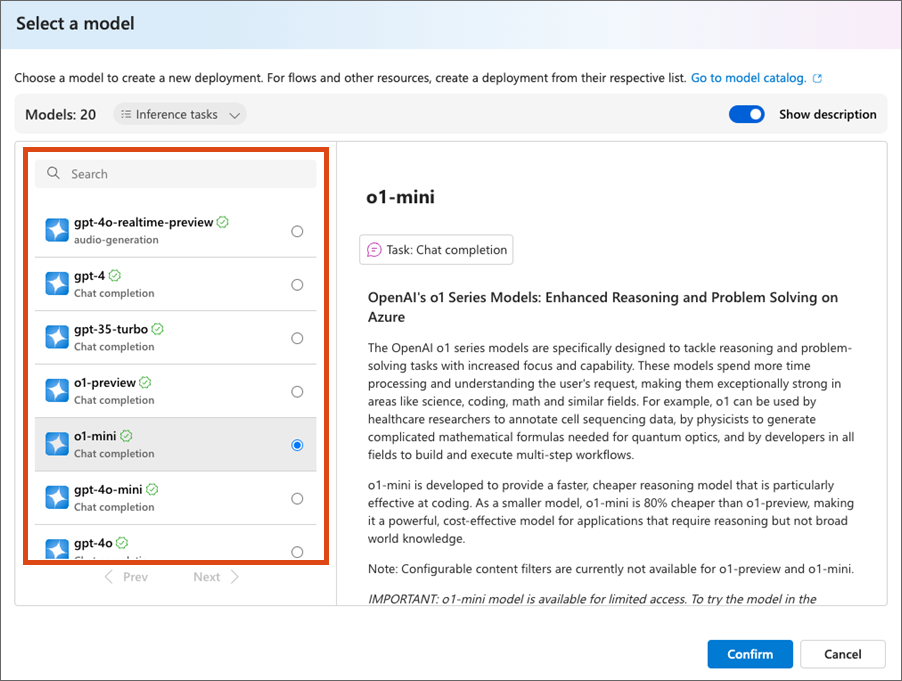

A configuration menu opens. Select the desired model from the list on the left.

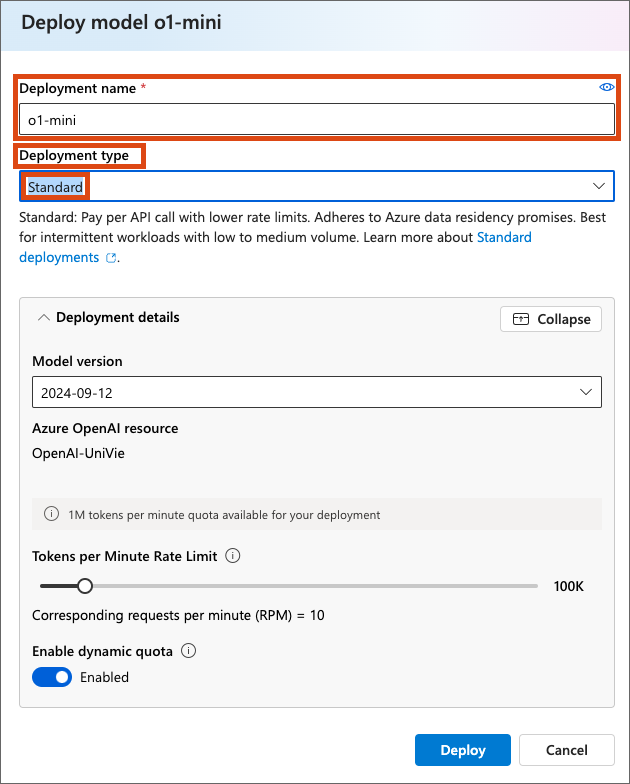

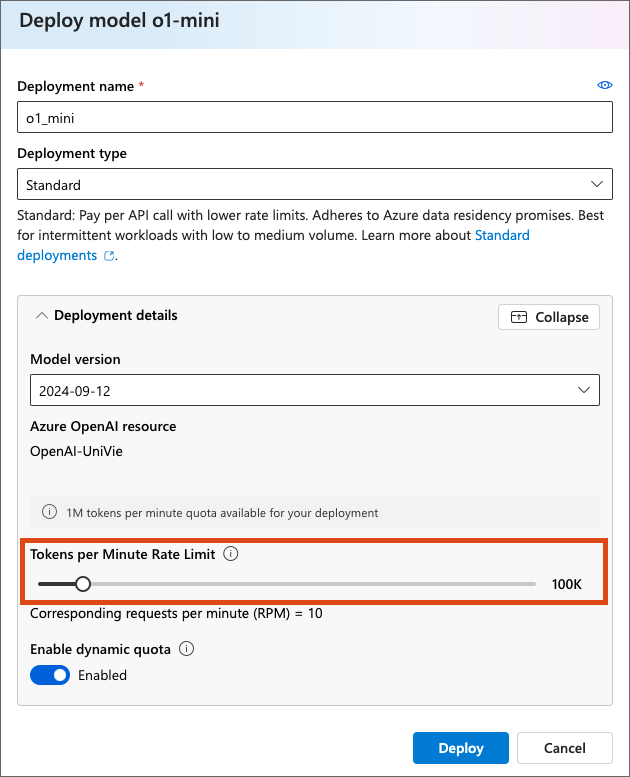

After selecting the model, you can assign a deployment name of your choice.

The ZID strongly recommends selecting the Standard option under Deployment type. This ensures that your data is processed in the selected Azure region within the EU.

If you select Standard as the Deployment type, as recommended, an additional configuration menu opens.

Here you can adjust further settings, including the rate limit in tokens per minute (TPM). This limit determines the maximum processing rate of the deployment: the higher the TPM value, the more requests per minute the model can process. The preset value should be sufficient for most use cases.
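As a rough illustration of how the tokens-per-minute limit translates into requests, the sketch below divides the limit by an assumed average number of tokens per request (prompt plus response). The function name and the figures are hypothetical. The result is only an upper bound, because actual throughput also depends on how large each request really is and on any other limits Azure applies.

```python
def max_requests_per_minute(tokens_per_minute: int, tokens_per_request: int) -> int:
    """Rough upper bound on requests per minute for a given TPM limit.

    tokens_per_request is an assumed average of prompt plus response tokens;
    real requests vary in size.
    """
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    # Only whole requests count, so round down.
    return tokens_per_minute // tokens_per_request


if __name__ == "__main__":
    # Hypothetical figures: requests averaging 500 tokens each.
    print(max_requests_per_minute(10_000, 500))  # 20
    print(max_requests_per_minute(20_000, 500))  # 40
```

Doubling the TPM limit doubles this bound, which is why raising the limit lets the deployment serve more requests per minute.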

You can find more information about tokens and the available quotas on the Microsoft website.

Once you have made all the settings, click Create. The Deployments overview opens and lists the newly created deployment.
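The settings chosen in the portal can also be expressed as a deployment resource for the Azure Resource Manager (ARM) REST API. The sketch below only builds the resource path and request body and sends nothing. It assumes the `Microsoft.CognitiveServices/accounts/deployments` resource shape and that, for Standard deployments, one capacity unit corresponds to 1,000 tokens per minute. The helper names, the model name `gpt-4o` and the version string are placeholders. Check the current Microsoft documentation before relying on this.

```python
import json


def deployment_path(subscription_id: str, resource_group: str,
                    account_name: str, deployment_name: str) -> str:
    """ARM resource path of a deployment (the api-version query is not included)."""
    # The deployment name chosen in the portal is the last path segment.
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.CognitiveServices/accounts/{account_name}"
        f"/deployments/{deployment_name}"
    )


def build_deployment_body(model_name: str, model_version: str,
                          tokens_per_minute: int) -> dict:
    """Request body for a Standard deployment with the given TPM limit."""
    # Assumption: for Standard deployments, one capacity unit = 1,000 TPM.
    if tokens_per_minute < 1000:
        raise ValueError("tokens_per_minute must be at least 1,000")
    capacity = tokens_per_minute // 1000
    return {
        # "Standard" is the deployment type recommended by the ZID.
        "sku": {"name": "Standard", "capacity": capacity},
        "properties": {
            "model": {
                "format": "OpenAI",
                "name": model_name,
                "version": model_version,
            }
        },
    }


if __name__ == "__main__":
    # Placeholder values: replace with your own subscription, resource and model.
    print(deployment_path("<subscription-id>", "<resource-group>",
                          "<openai-resource>", "my-deployment"))
    print(json.dumps(build_deployment_body("gpt-4o", "2024-08-06", 10_000), indent=2))
```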

You can now use the deployed OpenAI model.
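Requests to the deployed model address it by the deployment name you assigned during configuration, not by the model name. The sketch below builds, but does not send, a chat completions request using only Python's standard library. It assumes a chat model, key-based authentication through the `api-key` header, and the example API version `2024-02-01`. The endpoint, key and helper name are placeholders; copy the real endpoint and key from your resource in the Azure portal.

```python
import json
import urllib.request


def build_chat_request(endpoint: str, deployment_name: str, api_key: str,
                       prompt: str, api_version: str = "2024-02-01") -> urllib.request.Request:
    """Build (but do not send) a chat completions request for a deployment."""
    # The deployment name, not the model name, appears in the URL path.
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment_name}"
        f"/chat/completions?api-version={api_version}"
    )
    body = {"messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder endpoint and key: replace with the values of your resource.
    req = build_chat_request("https://my-resource.openai.azure.com",
                             "my-deployment", "<your-api-key>", "Hello!")
    print(req.full_url)
```

Passing the request to `urllib.request.urlopen` would send it to the service.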